Recently, deep generative adversarial networks for image generation have advanced rapidly; yet, only a small amount of research has focused on generative models for irregular structures, particularly meshes. Nonetheless, mesh generation and synthesis remain a fundamental topic in computer graphics. In this work, we propose a novel framework for synthesizing geometric textures. It learns geometric texture statistics from local neighborhoods (i.e., local triangular patches) of a single reference 3D model. It learns deep features on the faces of the input triangulation, which are used to subdivide the mesh and generate offsets across multiple scales, without parameterization of the reference or target mesh. Our network displaces mesh vertices in any direction (i.e., in both the normal and tangential directions), enabling synthesis of geometric textures that cannot be expressed by a simple 2D displacement map. Learning and synthesizing on local geometric patches enables a genus-oblivious framework, facilitating texture transfer between shapes of different genus.
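The difference between normal and tangential displacement can be made concrete with a small sketch. This is not the paper's network. It assumes each vertex receives a 3-component offset expressed in a local frame (two tangent axes plus the vertex normal), and the helper names `vertex_normals`, `tangent_frame`, and `displace` are hypothetical. A scalar displacement map can only set the third (normal) component, while the first two components move vertices along the surface.

```python
import numpy as np

def vertex_normals(vertices, faces):
    """Area-weighted vertex normals: sum the unnormalized face normals around each vertex."""
    v0, v1, v2 = (vertices[faces[:, i]] for i in range(3))
    face_n = np.cross(v1 - v0, v2 - v0)  # length equals twice the triangle area
    normals = np.zeros_like(vertices)
    for i in range(3):
        np.add.at(normals, faces[:, i], face_n)  # accumulate over repeated vertex indices
    return normals / np.linalg.norm(normals, axis=1, keepdims=True)

def tangent_frame(normals):
    """Two unit tangent vectors orthogonal to each normal (and to each other)."""
    # Choose a helper axis that is not nearly parallel to the normal.
    helper = np.where(np.abs(normals[:, :1]) < 0.9, [[1.0, 0.0, 0.0]], [[0.0, 1.0, 0.0]])
    t1 = np.cross(normals, helper)
    t1 /= np.linalg.norm(t1, axis=1, keepdims=True)
    t2 = np.cross(normals, t1)
    return t1, t2

def displace(vertices, faces, offsets):
    """Hypothetical offset layout: offsets[:, 0:2] are tangential, offsets[:, 2] is along the normal."""
    n = vertex_normals(vertices, faces)
    t1, t2 = tangent_frame(n)
    return vertices + offsets[:, :1] * t1 + offsets[:, 1:2] * t2 + offsets[:, 2:3] * n
```

Setting `offsets[:, :2]` to zero recovers a plain displacement along the normal; nonzero tangential components let vertices slide sideways, which a height field along the normal cannot represent.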

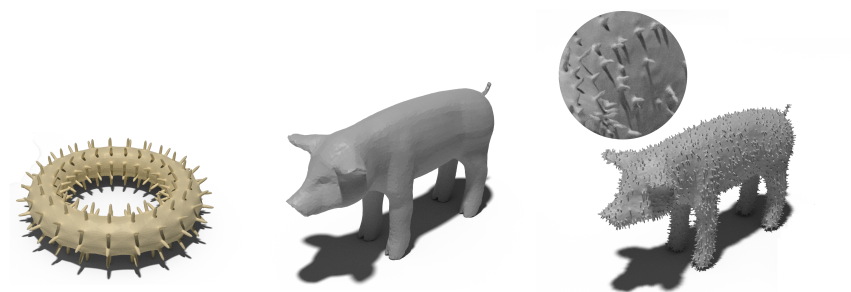

The general idea is as follows: we are given a single 3D exemplar model that contains some geometric texture (left). We would like to train a neural network to automatically learn and then synthesize the exemplar's geometric texture. Then, given a different (target) shape (middle), we synthesize the exemplar's geometric texture over the target shape (right).

This work has three main components: (1) a preliminary optimization phase for generating training data, (2) progressive training and (3) inference. Each phase is summarized below.

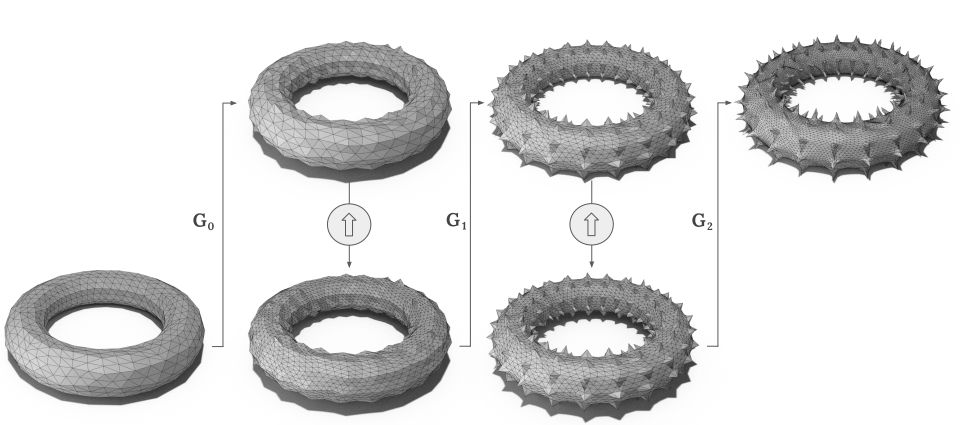

The objective is to learn the unknown texture statistics from local triangular patches. Starting with the base mesh (left), the first-level generator (G0) learns deep features on the faces of the input triangulation, which are used to generate vertex displacements. This output is upsampled (i.e., subdivided) before being passed to the next-level generator, which displaces its vertices. This process is repeated until the finest level in the series is reached.
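A minimal sketch of this coarse-to-fine loop follows. It assumes each generator is a hypothetical callable mapping a mesh (vertices, faces) to per-vertex offsets; in the paper the generators are trained networks operating on face features, which this sketch does not model. `midpoint_subdivide` and `synthesize` are illustrative names, and the subdivision splits each triangle into four while sharing one midpoint per common edge.

```python
import numpy as np
from typing import Callable, List, Tuple

# Hypothetical generator signature: (vertices V x 3, faces F x 3) -> offsets V x 3.
Generator = Callable[[np.ndarray, np.ndarray], np.ndarray]

def midpoint_subdivide(vertices: np.ndarray, faces: np.ndarray) -> Tuple[np.ndarray, np.ndarray]:
    """Split every triangle into four by inserting a vertex at each edge midpoint."""
    new_vertices = list(vertices)
    midpoint_index = {}

    def midpoint(a: int, b: int) -> int:
        key = (min(a, b), max(a, b))  # adjacent faces reuse the same edge midpoint
        if key not in midpoint_index:
            midpoint_index[key] = len(new_vertices)
            new_vertices.append((vertices[a] + vertices[b]) / 2.0)
        return midpoint_index[key]

    new_faces = []
    for a, b, c in faces.tolist():
        ab, bc, ca = midpoint(a, b), midpoint(b, c), midpoint(c, a)
        # Three corner triangles plus the center one, keeping the original orientation.
        new_faces += [[a, ab, ca], [ab, b, bc], [ca, bc, c], [ab, bc, ca]]
    return np.array(new_vertices), np.array(new_faces)

def synthesize(base_vertices: np.ndarray, base_faces: np.ndarray,
               generators: List[Generator]) -> Tuple[np.ndarray, np.ndarray]:
    """Coarse to fine: G0 displaces the base mesh; each later level subdivides, then displaces."""
    v, f = base_vertices, base_faces
    for level, g in enumerate(generators):
        if level > 0:
            v, f = midpoint_subdivide(v, f)  # upsample before the next-level generator
        v = v + g(v, f)  # this level's per-vertex displacements
    return v, f
```

With stand-in generators such as `lambda v, f: np.zeros_like(v)`, three levels turn a single triangle into 16 faces; real generators would replace these stand-ins.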

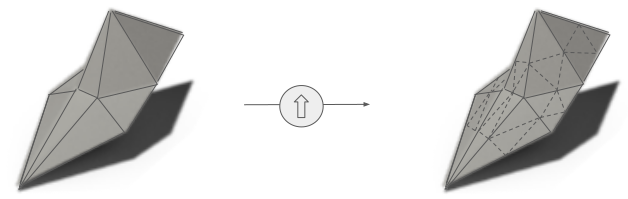

In the preliminary optimization phase, we create a series of progressive geometric textures for the single 3D exemplar (right) via an offline optimization procedure.

These shapes serve as supervision for training the series of progressive generators. Starting with a low-resolution base shape (left), we minimize the distance to the exemplar by displacing the vertices of the mesh. We then increase the resolution via midpoint subdivision and re-optimize.
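The per-level fitting step can be sketched as gradient descent on vertex positions. This sketch assumes the "distance to the exemplar" is a symmetric squared Chamfer distance between the mesh vertices and points sampled from the exemplar surface, with no regularization terms; the actual objective may differ. The names `chamfer_grad` and `fit_level`, the step size, and the step count are illustrative.

```python
import numpy as np

def chamfer_grad(v: np.ndarray, target: np.ndarray):
    """Symmetric squared Chamfer distance between vertices v (V x 3) and target points (T x 3),
    plus its gradient with respect to v (nearest-neighbor assignments held fixed)."""
    d = ((v[:, None, :] - target[None, :, :]) ** 2).sum(-1)  # V x T squared distances, brute force
    nn_v = d.argmin(1)  # closest target point for each vertex
    nn_t = d.argmin(0)  # closest vertex for each target point
    loss = d[np.arange(len(v)), nn_v].mean() + d[nn_t, np.arange(len(target))].mean()
    grad = 2.0 * (v - target[nn_v]) / len(v)
    # Every target point pulls on its nearest vertex; np.add.at handles repeated indices.
    np.add.at(grad, nn_t, 2.0 * (v[nn_t] - target) / len(target))
    return loss, grad

def fit_level(v: np.ndarray, target: np.ndarray, steps: int = 200, lr: float = 0.25) -> np.ndarray:
    """Displace vertices by plain gradient descent to reduce the distance to the target points."""
    for _ in range(steps):
        _, g = chamfer_grad(v, target)
        v = v - lr * g
    return v
```

Between levels, the mesh would be midpoint-subdivided and `fit_level` run again on the finer vertices, yielding one supervision mesh per level.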

After training is complete, we can use the series of progressive generators to synthesize progressive geometric textures on novel target shapes that were not seen during training.